1. What is Machine Learning (ML)?

- Machine learning is a branch of computing in which computers learn to make decisions or predictions from data instead of being explicitly programmed for each task. The computer finds patterns in example data, and its predictions generally improve as it is trained on more data.

- Examples of machine learning include:

  - Recommending videos on YouTube.

  - Detecting spam emails.

  - Recognizing faces in photos.
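
To make "learns patterns in the data" concrete, here is a minimal sketch of learning from data: a program fits a straight line `y = w * x + b` to example points using gradient descent. The function name `fit_line`, the data points, the learning rate, and the number of epochs are all hypothetical choices for illustration; real ML models have millions of parameters instead of two, but they improve in the same way, by repeatedly adjusting parameters to reduce their error on the data.

```python
# A minimal sketch of "learning from data": fit y = w * x + b with gradient descent.
# fit_line, the data, and the hyperparameters are hypothetical, chosen for illustration.

def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Learn w and b that minimize the mean squared error on (xs, ys)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient so the error shrinks as training goes on.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Made-up training data that follows y = 2x + 1; the program is never told this rule.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")  # prints: learned w=2.00, b=1.00
```

The program recovers the rule `y = 2x + 1` purely from the examples, which is the essence of learning rather than explicit programming.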

2. What is a Hardware Accelerator?

- A hardware accelerator is a specialized piece of computer hardware designed to speed up specific tasks or operations. In machine learning, accelerators are chips that perform the calculations needed to train and run models much faster than a general-purpose CPU (Central Processing Unit).

3. Why Do We Need Machine Learning Hardware Accelerators?

- Machine learning models, especially deep learning models (a type of ML), require an enormous number of calculations to process data. Each calculation is simple, mostly multiplications and additions, but large models can need billions of them for a single prediction. Regular CPUs, which are general-purpose processors, work through this volume of calculations slowly.

- To train models faster or make predictions (inferences) in real time, we need specialized hardware that can handle these tasks more efficiently.
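
A quick back-of-the-envelope count shows why "a lot of calculations" is an understatement. The sketch below uses the common approximation that multiplying an (m x k) matrix by a (k x n) matrix costs about 2 * m * k * n floating-point operations (FLOPs): one multiplication and one addition for each of the k terms in each of the m * n outputs. The function name `matmul_flops` and the layer sizes are hypothetical, chosen only to illustrate the scale.

```python
def matmul_flops(m, k, n):
    """Approximate floating-point operations for an (m x k) @ (k x n) product.

    Each of the m * n outputs needs k multiplications and about k additions,
    which gives the common 2 * m * k * n approximation.
    """
    return 2 * m * k * n

# Hypothetical layer: a batch of 64 inputs, 4096 features in, 4096 features out.
flops = matmul_flops(64, 4096, 4096)
print(f"{flops:,} FLOPs for one layer, one forward pass")
```

That is over two billion operations for a single layer on a single batch; a deep model has many such layers and is trained on many batches, so the total quickly reaches scales where specialized hardware pays off.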

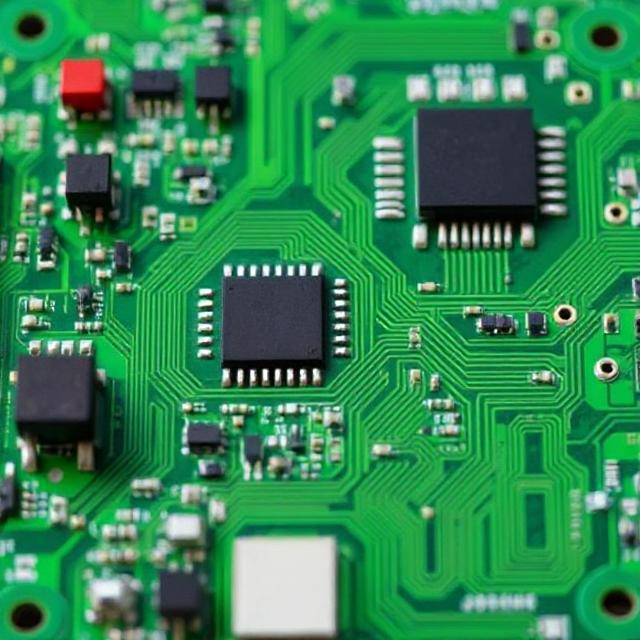

4. Types of Machine Learning Hardware Accelerators:

A. Graphics Processing Units (GPUs)

- GPU stands for Graphics Processing Unit. Originally, GPUs were designed for rendering images and videos on your screen, but they are also great at performing the parallel processing needed in machine learning.

- A CPU has a small number of powerful cores optimized for running instructions one after another (sequential processing). A GPU has thousands of simpler cores that can perform many calculations at the same time (parallel processing). This suits machine learning, whose core operations, such as matrix calculations and vector operations, consist of many independent calculations.

- GPUs are widely used in training deep learning models because they are much faster than CPUs at processing large amounts of data simultaneously.
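
The reason matrix calculations parallelize so well is that every element of a matrix product is an independent dot product. The sketch below computes the same product two ways: one element after another, and by handing all (row, column) pairs to a pool of workers at once. The function names are hypothetical, and Python threads stand in for GPU cores purely to illustrate the independence; in CPython, threads do not actually speed up pure-Python arithmetic, whereas a GPU runs thousands of these dot products in hardware.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    """One output element: the dot product of a row of A and a column of B."""
    return sum(a * b for a, b in zip(row, col))

def matmul_sequential(A, B):
    cols = list(zip(*B))  # columns of B
    # Sequential style: compute output elements one after another.
    return [[dot(row, col) for col in cols] for row in A]

def matmul_parallel(A, B, workers=4):
    cols = list(zip(*B))
    pairs = [(row, col) for row in A for col in cols]
    # Every (row, col) pair is independent, so all of them can be submitted at once.
    # Threads only illustrate the idea; a GPU would run these in parallel hardware.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda p: dot(*p), pairs))
    n = len(cols)
    # Regroup the flat results (pool.map preserves order) into rows.
    return [flat[i:i + n] for i in range(0, len(flat), n)]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_sequential(A, B))  # [[19, 22], [43, 50]]
print(matmul_parallel(A, B))    # [[19, 22], [43, 50]]
```

Both versions produce the same result because no output element depends on any other, which is exactly the property parallel hardware exploits.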

B. Tensor Processing Units (TPUs)

- A TPU is a type of accelerator created by Google specifically for machine learning workloads.

- TPUs are designed to efficiently run machine learning models, especially deep learning models that require a lot of computation.

- TPUs are optimized for tensor operations, which are at the core of deep learning algorithms. Tensors are multi-dimensional arrays (generalizations of vectors and matrices) that hold the data a model works with, such as its inputs, weights, and outputs.

- TPUs can be faster than GPUs for certain workloads, especially training large deep learning models, and Google offers them through its cloud services to power AI applications.
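
To make "tensors are multi-dimensional arrays" concrete, the sketch below represents tensors of increasing rank as nested Python lists and reports each one's shape (the size along each dimension) and rank (the number of dimensions). The `shape` helper and the tiny "image batch" are hypothetical illustrations; real frameworks store tensors in compact numeric arrays, but the idea of shape and rank is the same.

```python
def shape(tensor):
    """Return the shape of a regular nested list, e.g. (2, 3) for a 2x3 matrix.

    Assumes every sub-list along an axis has the same length, so only the
    first element is inspected at each level.
    """
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)

scalar = 5.0                                  # rank 0
vector = [1.0, 2.0, 3.0]                      # rank 1
matrix = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # rank 2
# Hypothetical batch of 2 tiny grayscale "images", 2x2 pixels, 1 channel: rank 4.
images = [[[[0.1], [0.2]], [[0.3], [0.4]]],
          [[[0.5], [0.6]], [[0.7], [0.8]]]]

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("images", images)]:
    s = shape(t)
    print(name, "shape", s, "rank", len(s))
```

A batch of images is naturally a rank-4 tensor (batch, height, width, channels), and hardware built around tensor operations can process the whole batch in one pass.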

C. Field-Programmable Gate Arrays (FPGAs)

- An FPGA is a chip whose internal circuitry can be reconfigured after manufacturing. GPUs and TPUs have fixed hardware designed with certain tasks in mind, and only the software they run changes; an FPGA, by contrast, can be reprogrammed at the hardware level to perform different operations.

- FPGAs are very efficient for real-time machine learning inference (making predictions) because they can be tailored to a specific ML model, providing high performance and low latency.

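- However, FPGAs are harder to work with than GPUs or TPUs: configuring them typically requires hardware description languages such as Verilog or VHDL (or high-level synthesis tools) and specialized engineering expertise.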

D. Application-Specific Integrated Circuits (ASICs)

- ASICs are custom-built chips designed for one specific application; here, that application is machine learning.

- An ASIC designed for ML is highly optimized to perform specific calculations needed for training or running ML models at high speed and efficiency.

- One of the best-known ML ASICs is Google’s TPU, a chip built specifically for machine learning tasks.

5. How Do Machine Learning Hardware Accelerators Help?

- Speed: ML models, especially deep learning models, can take a long time to train. Hardware accelerators speed up the process, enabling faster training and real-time predictions. For example, tasks that might take hours on a CPU could be completed in minutes on a GPU or TPU.

- Efficiency: Accelerators are designed to handle the specific types of operations used in machine learning much more efficiently than general-purpose processors. This means they can do more work using less power and fewer resources.

- Parallel Processing: Machine learning tasks often involve performing similar calculations many times (like matrix multiplications). Hardware accelerators like GPUs can perform these operations in parallel, meaning they carry out many of them at the same time rather than one after another, which makes them much faster.

- Real-Time Inference: Once a model is trained, hardware accelerators are used to make real-time predictions. For example, in autonomous vehicles, a trained ML model uses a hardware accelerator to process sensor data (like camera images) in real time to make driving decisions.
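
The speed and efficiency points above come down to simple arithmetic: runtime is roughly the total work divided by the throughput the hardware sustains, and energy is power multiplied by time. The sketch below applies this to two devices. Every number in it (the total FLOPs, each device's throughput, and its power draw) is hypothetical and chosen only to show the calculation, not to describe any real CPU or accelerator; real throughput also depends on how well the software keeps the hardware busy.

```python
def training_time_hours(total_flops, flops_per_second):
    """Idealized runtime: total work divided by sustained throughput."""
    return total_flops / flops_per_second / 3600

def energy_kwh(hours, watts):
    """Energy used = power draw x time, converted from watt-hours to kWh."""
    return watts * hours / 1000

# All numbers below are hypothetical, chosen only to illustrate the arithmetic.
TOTAL_FLOPS = 1e17  # work needed to train some model
devices = {
    "cpu": {"flops_per_second": 1e12, "watts": 200},
    "accelerator": {"flops_per_second": 1e14, "watts": 400},
}

for name, d in devices.items():
    hours = training_time_hours(TOTAL_FLOPS, d["flops_per_second"])
    kwh = energy_kwh(hours, d["watts"])
    print(f"{name}: {hours:.2f} h ({hours * 60:.0f} min), {kwh:.2f} kWh")
```

With these made-up numbers, the CPU needs about 28 hours while the accelerator finishes in about 17 minutes, and even though the accelerator draws twice the power, it uses far less total energy because it finishes so much sooner.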

6. Why is Machine Learning Hard for Regular CPUs?

- CPUs are designed to be general-purpose processors, meaning they can handle a wide variety of tasks, but they aren’t specialized for any one task. They excel at sequential processing, but when a task requires performing huge numbers of calculations on large amounts of data at once, CPUs are slower than specialized hardware.

- ML tasks, especially deep learning, involve doing the same types of calculations on large datasets repeatedly. This workload is highly parallel (many calculations can run at once), which makes GPUs, TPUs, and FPGAs much better suited to it than CPUs.

7. Examples of Machine Learning Hardware Accelerators in Action

- Training Deep Learning Models: When companies like Google or Facebook train massive machine learning models for tasks like image recognition or natural language processing, they use GPUs or TPUs to speed up the training process.

- Autonomous Vehicles: Self-driving cars use hardware accelerators to process sensor data in real time. They need to make decisions quickly and accurately, so they rely on GPUs or FPGAs to analyze data from cameras, radar, and lidar sensors.

- Voice Assistants: Virtual assistants like Amazon Alexa or Google Assistant use hardware accelerators for real-time speech recognition. They take your spoken words, process them with ML models, and respond almost instantly.
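
For the self-driving example, "making decisions quickly" has a concrete meaning: each camera frame must be processed before the next one arrives. The sketch below computes that per-frame time budget and checks whether a given inference latency fits inside it. The 30 frames-per-second camera and both latency figures are hypothetical values chosen for illustration, not measurements of any real vehicle or chip.

```python
def frame_budget_ms(frames_per_second):
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / frames_per_second

def keeps_up(latency_ms, frames_per_second):
    """True if per-frame inference finishes within the frame budget."""
    return latency_ms <= frame_budget_ms(frames_per_second)

# Hypothetical: a camera at 30 frames per second and two made-up inference latencies.
fps = 30
print(f"budget per frame: {frame_budget_ms(fps):.1f} ms")                        # 33.3 ms
print("CPU keeps up:", keeps_up(latency_ms=120.0, frames_per_second=fps))         # False
print("accelerator keeps up:", keeps_up(latency_ms=15.0, frames_per_second=fps))  # True
```

If inference takes longer than the budget, frames pile up and the car reacts to an increasingly stale view of the road, which is why real-time systems rely on accelerators.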

8. Summary of Machine Learning Hardware Accelerators:

| Hardware Type | Best For | Pros | Cons |
|---|---|---|---|
| GPU (Graphics Processing Unit) | General ML tasks, especially deep learning | High parallel processing power, widely available | Can be power-hungry, expensive |
| TPU (Tensor Processing Unit) | Deep learning, especially for training large models | Extremely fast for ML, highly optimized for TensorFlow and JAX | Data-center TPUs are available only through Google Cloud |
| FPGA (Field-Programmable Gate Array) | Custom ML tasks, real-time inference | Flexible, customizable for specific tasks | Requires complex programming |
| ASIC (Application-Specific Integrated Circuit) | Highly specific ML applications | Very fast and power-efficient | Expensive to design and manufacture |

Conclusion:

- Machine learning hardware accelerators like GPUs, TPUs, FPGAs, and ASICs are specialized hardware designed to speed up the complex calculations involved in machine learning.

- These accelerators enable faster training of models, real-time predictions, and efficient processing of large datasets.

- Each type of accelerator has its own strengths and is suited for different tasks, but they all help make machine learning faster and more powerful, enabling technologies like self-driving cars, voice assistants, and more.